Ceph in Practice

Preparation:

- Disable SELinux

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

setenforce 0

- Open the Ceph ports

# firewall-cmd --zone=public --add-port=6789/tcp --permanent

# firewall-cmd --zone=public --add-port=6800-7100/tcp --permanent

# firewall-cmd --reload

- Install the EPEL repository

# yum -y install epel-release

- Install NTP and synchronize the clock

# yum -y install ntp ntpdate ntp-doc

# ntpdate 0.us.pool.ntp.org

# hwclock --systohc

# systemctl enable ntpd.service

# systemctl start ntpd.service
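Note that the sed command in the SELinux step only rewrites a line that reads SELINUX=enforcing, so a host already set to permissive is left unchanged. A minimal sketch of a check on the config file follows; the function name selinux_config_mode is made up for illustration, and the file path is a parameter so the check can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Print the mode set by the uncommented SELINUX= line of an SELinux config file.
# selinux_config_mode is a hypothetical helper name, not part of any package.
selinux_config_mode() {
    local config_file="${1:-/etc/selinux/config}"
    # "SELINUXTYPE=" and comment lines do not match the "^SELINUX=" pattern.
    sed -n 's/^SELINUX=//p' "$config_file"
}

# Example on the real file (commented out so the snippet has no side effects):
# [ "$(selinux_config_mode)" = "disabled" ] || echo "SELinux is not disabled in the config file"
```

If the function prints anything other than disabled, edit the SELINUX= line by hand before rebooting.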

Installing Ceph

Install the Ceph repository

# rpm -Uvh http://ceph.com/rpm-hammer/el7/noarch/ceph-release-1-1.el7.noarch.rpm

# yum update -y

Install the ceph-deploy tool

# yum install ceph-deploy -y

Create a working directory for the Ceph installation

# mkdir ~/ceph-installation

Create the Monitor nodes

# ceph-deploy new mon1 mon2 mon3

Install the Ceph packages on every node (both Monitor and OSD nodes)

# ceph-deploy install mon1 mon2 mon3 osd1

Initialize the Monitor nodes

# ceph-deploy mon create-initial

Set up an OSD

- Partition the disk:

parted /dev/sdb

mklabel gpt

mkpart primary 0% 50GB

mkpart primary xfs 50GB 100%

- Format the data partition

mkfs.xfs /dev/sdb2

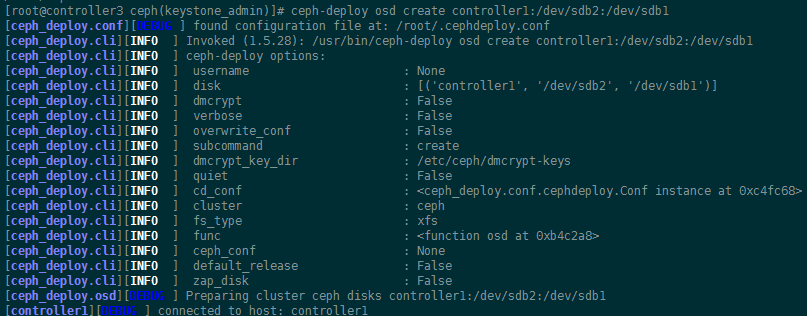

- Create the OSD

ceph-deploy osd create osd1:/dev/sdb2:/dev/sdb1

- Activate the OSD

ceph-deploy osd activate osd1:/dev/sdb2:/dev/sdb1
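The four OSD steps above can be collected into a dry-run plan that only prints the commands for one disk. This is a sketch, not a tested deployment script: osd_disk_plan is a hypothetical helper, the partition layout (partition 1 as journal, partition 2 as data) is taken from the steps above, and parted's script mode (-s) is assumed to accept the same sub-commands that were typed interactively.

```shell
#!/usr/bin/env bash
# Print (without running) the commands that turn one disk into an OSD.
# Layout assumed from the walkthrough: partition 1 = journal, partition 2 = data.
osd_disk_plan() {
    local host="$1" disk="$2" journal_end="${3:-50GB}"
    # parted and mkfs.xfs act on the disk of the OSD host itself.
    echo "parted -s ${disk} mklabel gpt mkpart primary 0% ${journal_end} mkpart primary xfs ${journal_end} 100%"
    echo "mkfs.xfs ${disk}2"
    # ceph-deploy takes host:data-partition:journal-partition.
    echo "ceph-deploy osd create ${host}:${disk}2:${disk}1"
    echo "ceph-deploy osd activate ${host}:${disk}2:${disk}1"
}

osd_disk_plan osd1 /dev/sdb
```

Printing the plan first makes it easy to double-check the device name before anything destructive runs.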

Creating several OSDs at once

ceph-deploy osd create controller2:/dev/sdb2:/dev/sdb1 controller2:/dev/sdd2:/dev/sdd1 controller2:/dev/sde2:/dev/sde1

ceph-deploy osd activate controller2:/dev/sdb2:/dev/sdb1 controller2:/dev/sdd2:/dev/sdd1 controller2:/dev/sde2:/dev/sde1
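Typing the long host:data:journal list by hand is error-prone. A small sketch that builds it from a host name and a list of disks, assuming every disk uses the same layout as above (partition 2 for data, partition 1 for the journal); osd_specs is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Build the "host:data:journal" arguments for several disks on one host.
# Assumes partition 2 holds the data and partition 1 the journal on every disk.
osd_specs() {
    local host="$1"; shift
    local specs=() disk
    for disk in "$@"; do
        specs+=("${host}:/dev/${disk}2:/dev/${disk}1")
    done
    # Join the entries with single spaces.
    echo "${specs[*]}"
}

osd_specs controller2 sdb sdd sde
# Possible use (commented out, needs a working ceph-deploy setup):
# ceph-deploy osd create $(osd_specs controller2 sdb sdd sde)
```

The call above prints the same three arguments that are passed to ceph-deploy in the commands just shown.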

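Notes:

- Steps 1-6 only need to be run on one machine, the installation node.

- Only these two steps have to be run on each node:

Format the data partition

mkfs.xfs /dev/sdb2

Create the OSD

ceph-deploy osd create osd1:/dev/sdb2:/dev/sdb1

- Hard disks: use the first partition of the first disk as the journal partition.

- SSD: split the SSD into several partitions and use them as journal storage for several OSDs.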

Basic configuration and checks

[root@controller3 ceph]# ceph health

[root@controller3 ceph]# ceph -w
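For scripted checks, the first word of the ceph health output (HEALTH_OK, HEALTH_WARN or HEALTH_ERR) can be mapped to an exit code. The sketch below uses a monitoring-style convention (0 ok, 1 warning, 2 error, 3 unknown) that is my own choice rather than anything Ceph defines, and health_to_exit_code is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Map the output of `ceph health` to an exit code.
# The 0/1/2/3 convention is an assumption borrowed from monitoring plugins.
health_to_exit_code() {
    case "$1" in
        HEALTH_OK*)   return 0 ;;
        HEALTH_WARN*) return 1 ;;
        HEALTH_ERR*)  return 2 ;;
        *)            return 3 ;;   # empty or unrecognized output
    esac
}

# Possible use on a cluster node (commented out, needs a running cluster):
# health_to_exit_code "$(ceph health)"; echo "exit code: $?"
```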

[root@controller2 ~(keystone_admin)]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 2.7T 0 disk

├─sda1 8:1 0 1M 0 part

├─sda2 8:2 0 500M 0 part /boot

└─sda3 8:3 0 2.7T 0 part

├─centos-swap 253:0 0 7.9G 0 lvm [SWAP]

├─centos-root 253:1 0 50G 0 lvm /

└─centos-home 253:2 0 2.7T 0 lvm /home

sdb 8:16 0 2.7T 0 disk

sdd 8:48 0 2.7T 0 disk

sde 8:64 0 2.7T 0 disk

loop0 7:0 0 2G 0 loop /srv/node/swiftloopback

loop2 7:2 0 20.6G 0 loop

[root@controller2 ~(keystone_admin)]# parted /dev/sdb

GNU Parted 3.1

Using /dev/sdb

Welcome to GNU Parted! Type 'help' to view a list of commands.

(parted) mklabel gpt

(parted) mkpart primary 0% 50GB

(parted) mkpart primary xfs 50GB 100%

(parted) p

Model: ATA HGST HUS724030AL (scsi)

Disk /dev/sdb: 3001GB

Sector size (logical/physical): 512B/512B

Partition Table: gpt

Disk Flags:

Number Start End Size File system Name Flags

1 1049kB 50.0GB 50.0GB primary

2 50.0GB 3001GB 2951GB primary

(parted)

[root@controller3 ceph]# ceph osd df

ID WEIGHT REWEIGHT SIZE USE AVAIL %USE VAR

0 2.67999 1.00000 2746G 37096k 2746G 0.00 1.03

7 2.67999 1.00000 2746G 35776k 2746G 0.00 0.99

8 2.67999 1.00000 2746G 35256k 2746G 0.00 0.98

1 2.67999 1.00000 2746G 36800k 2746G 0.00 1.02

5 2.67999 1.00000 2746G 35568k 2746G 0.00 0.99

6 2.67999 1.00000 2746G 35572k 2746G 0.00 0.99

2 2.67999 1.00000 2746G 36048k 2746G 0.00 1.00

3 2.67999 1.00000 2746G 36128k 2746G 0.00 1.00

4 2.67999 1.00000 2746G 35664k 2746G 0.00 0.99

TOTAL 24719G 316M 24719G 0.00

MIN/MAX VAR: 0.98/1.03 STDDEV: 0
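As far as I understand it, the VAR column is each OSD's utilization divided by the average utilization of all OSDs. Since every OSD here has the same size, the ratio can be reproduced from the USE column alone. A small sketch with awk; osd_var is a hypothetical helper name, and the numbers are copied from the output above.

```shell
#!/usr/bin/env bash
# Compute VAR for one OSD as its usage divided by the average usage.
# Arguments: the OSD's own USE value, then the USE values of all OSDs (same unit).
# Valid as a stand-in for utilization only because all OSDs have the same size.
osd_var() {
    local own="$1"; shift
    printf '%s\n' "$@" | awk -v own="$own" '
        { sum += $1; n++ }
        END { printf "%.2f\n", own / (sum / n) }'
}

# USE column (in kB) from the `ceph osd df` output above.
use_kb="37096 35776 35256 36800 35568 35572 36048 36128 35664"
osd_var 37096 $use_kb   # osd.0, prints 1.03
osd_var 35256 $use_kb   # osd.8, prints 0.98
```

Both results match the VAR column and the MIN/MAX VAR line of the output.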

[root@controller3 ceph]# ceph osd pool get rbd size

[root@controller3 ceph]# ceph osd pool set rbd pg_num 256

set pool 0 pg_num to 256

[root@controller3 ceph]# ceph osd pool set rbd pgp_num 256

Error EBUSY: currently creating pgs, wait

[root@controller3 ceph]# ceph osd pool set rbd pgp_num 256

set pool 0 pgp_num to 256
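The EBUSY error above goes away once the new placement groups have been created, so the second command simply has to be repeated. A generic retry helper can do the waiting. This is a sketch that assumes the ceph CLI exits with a non-zero status when it prints Error EBUSY; retry is a hypothetical helper, not a Ceph command.

```shell
#!/usr/bin/env bash
# Re-run a command until it succeeds or the attempts run out.
# Usage: retry ATTEMPTS DELAY_SECONDS command [args...]
retry() {
    local attempts="$1" delay="$2"; shift 2
    local i
    for ((i = 1; i <= attempts; i++)); do
        if "$@"; then
            return 0
        fi
        # Wait between attempts, but not after the last one.
        if (( i < attempts )); then
            sleep "$delay"
        fi
    done
    return 1
}

# Possible use (commented out, needs a running cluster):
# ceph osd pool set rbd pg_num 256
# retry 10 5 ceph osd pool set rbd pgp_num 256
```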

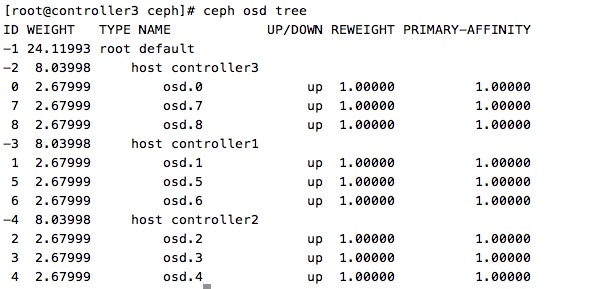

[root@controller3 ceph]# ceph osd tree

ID WEIGHT TYPE NAME UP/DOWN REWEIGHT PRIMARY-AFFINITY

-1 24.11993 root default

-2 8.03998 host controller3

0 2.67999 osd.0 up 1.00000 1.00000

7 2.67999 osd.7 up 1.00000 1.00000

8 2.67999 osd.8 up 1.00000 1.00000

-3 8.03998 host controller1

1 2.67999 osd.1 up 1.00000 1.00000

5 2.67999 osd.5 up 1.00000 1.00000

6 2.67999 osd.6 up 1.00000 1.00000

-4 8.03998 host controller2

2 2.67999 osd.2 up 1.00000 1.00000

3 2.67999 osd.3 up 1.00000 1.00000

4 2.67999 osd.4 up 1.00000 1.00000

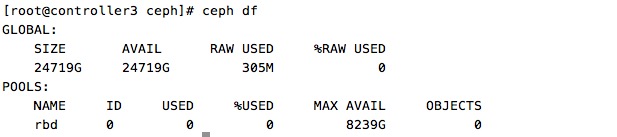

[root@controller3 ceph]# ceph df

GLOBAL:

SIZE AVAIL RAW USED %RAW USED

24719G 24719G 307M 0

POOLS:

NAME ID USED %USED MAX AVAIL OBJECTS

rbd 0 0 0 8239G 0
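The MAX AVAIL of 8239G is roughly the raw capacity divided by the number of replicas. The replica count of 3 is an assumption here (the output of ceph osd pool get rbd size is not shown above), and Ceph's real calculation also takes other factors into account, so treat this only as a back-of-the-envelope check; usable_capacity_gb is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Rough usable capacity: raw capacity divided by the replica count (integer GB).
# The replica count is an assumption; it is not shown in the output above.
usable_capacity_gb() {
    local raw_gb="$1" replicas="$2"
    echo $(( raw_gb / replicas ))
}

usable_capacity_gb 24719 3   # prints 8239
```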